Vision Paper

Launched at the Tallinn Digital Summit 2025, the Vision Paper shows how agentic AI will transform government and defines the contours of the Agentic State.

Executive Summary

AI agents — software that can perceive complex situations, reason through problems, and take autonomous action within defined boundaries — are already operating at commercial scale. Unlike previous waves of digitisation that automated existing processes, these systems can pursue outcomes, adapt through feedback, and coordinate across organisational boundaries.

Governments worldwide have consistently lagged in technology adoption, creating efficiency gaps and mounting citizen frustration. This pattern has left public institutions operating with outdated tools while citizens experience government as slower and less responsive than virtually every other service in their lives. Agentic AI, which industry leaders are adopting at an unprecedented pace, threatens to widen this gap further unless governments raise their game.

A Framework for Understanding the Agentic State

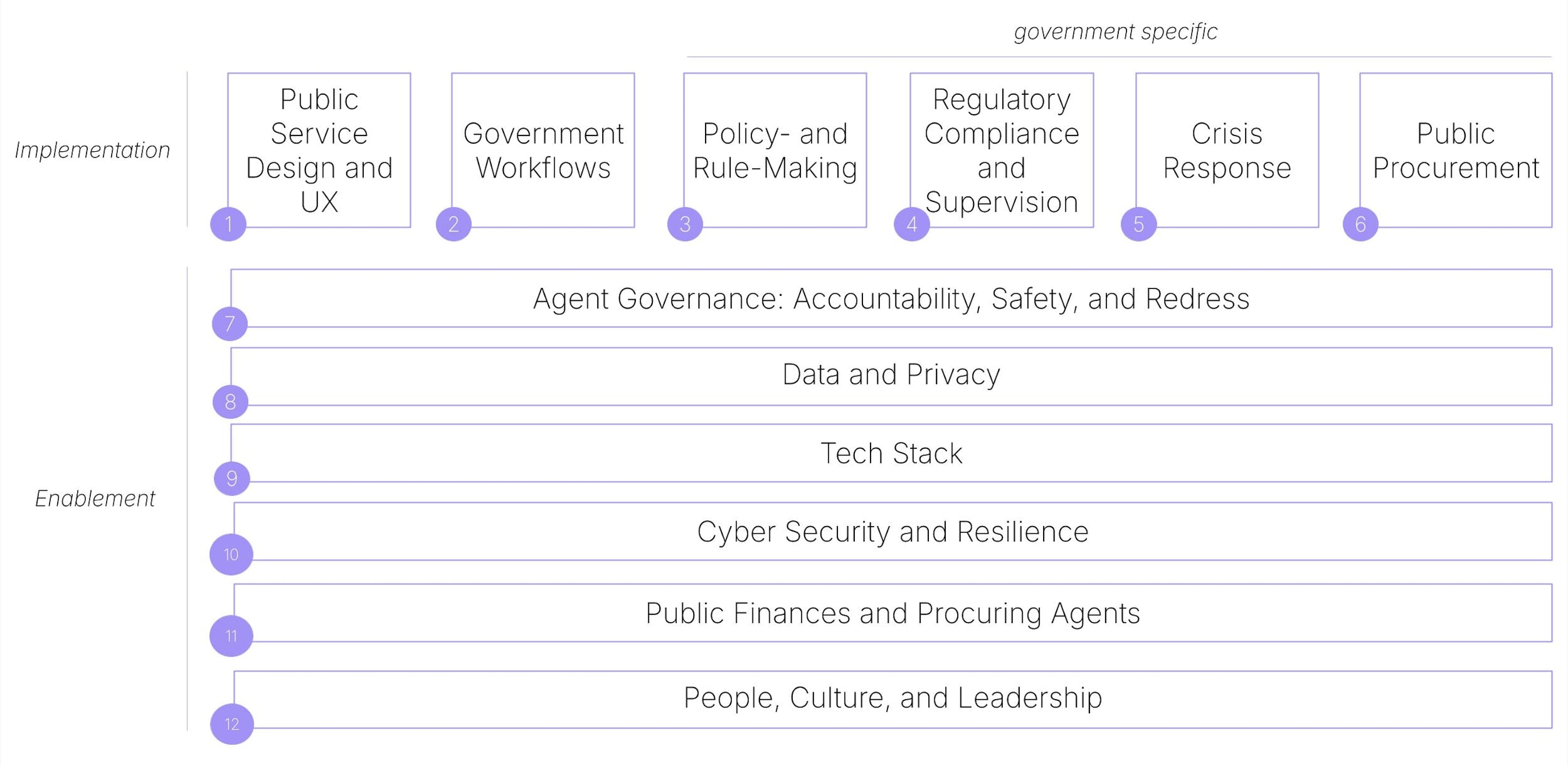

We analyse this transformation through twelve functional layers:

- Six implementation layers show where agents can deliver immediate value: public service design that becomes proactive and personalised; government workflows that self-orchestrate; policy-making that adapts continuously based on evidence; regulatory compliance that operates in real-time; crisis response that coordinates at machine speed; and procurement systems that negotiate autonomously within policy constraints.

- Six enablement layers address the structural requirements for successful deployment: governance frameworks for accountability and redress; data management and privacy protection; technical infrastructure spanning interfaces to compute resources; cybersecurity designed for autonomous systems; public finance models that handle variable, outcome-based costs; and organisational cultures capable of human-AI collaboration.

Together, when these twelve layers function in coordination, they create what we call the Agentic State: a comprehensive transformation of public administration where autonomous systems handle complexity and scale while human officials focus on strategic direction, political accountability, and decisions requiring judgment. This represents the most significant shift in how governments operate since the rise of modern bureaucracy.

The shift to the Agentic State represents more than efficiency gains. It enables fundamentally new forms of public administration, where services adjust dynamically to users’ needs, where policies adapt to real-world outcomes, and where government can operate at the speed and scale that modern challenges demand.

However, this transformation also faces substantial obstacles. Traditional bureaucratic structures optimise for procedural compliance rather than for outcomes. Accountability mechanisms assume civil servants operating at human speed. Existing technical infrastructure cannot support the real-time, cross-boundary coordination that agents require. Critically, workforce capabilities and organisational cultures must evolve to enable effective human-AI collaboration.

A Call for Deliberate Action

The technical building blocks for the Agentic State exist and are beginning to be proven at commercial scale. Private sector deployments demonstrate that autonomous systems can handle complex workflows, coordinate across organisational boundaries, and operate within defined constraints. Early government implementations show these capabilities translating to public administration contexts, with lower- and middle-income countries able to leapfrog more established digital government champions.

Governments that postpone engagement with agentic capabilities face accumulating disadvantages. Citizens and businesses will increasingly deploy their own AI agents while government processes remain manual, creating friction that erodes trust and effectiveness. Private intermediaries will fill service delivery gaps, introducing new dependencies and inequities. Late-moving governments will implement systems designed by others according to commercial priorities rather than public values, losing the opportunity to shape how agentic capabilities serve democratic governance. The window for proactive choice is narrower than many government leaders recognise.

This paper argues for thoughtful experimentation and adoption. The governments that start this work now — acknowledging uncertainty and building incrementally — will develop the knowledge and capacity required for the transformation ahead.

Introduction: The Urgency of Acting Now

Why governments cannot afford to lag behind agentic AI transformation

| TL;DR: Governments cannot afford to wait on agentic AI. Every prior delay in tech adoption has left public institutions weaker, costlier, less trusted and, most of all, less relevant. This time the stakes are higher: agentic systems do not just digitise processes — they can reimagine government itself, enabling more responsive, accountable, and fair services. The imperative now is proactive leadership: governments must act deliberately and with urgency to shape this transformation in the public interest. |

The Cost of Being Late to Technological Progress

For decades, governments worldwide have consistently lagged behind in technology adoption. While enterprises have achieved dramatic productivity gains through cloud computing, data analytics, and agile development practices, most public administrations remain anchored to legacy systems, paper processes, and rigid procurement cycles. This pattern has created substantial efficiency gaps and fuelled mounting frustration among citizens, who experience government as slower, less intuitive, and less responsive than virtually every other digital service in their lives.

The human cost has been equally significant. Public servants find themselves constrained by outdated tools that prevent effective service delivery. Talented technologists avoid government careers, creating a vicious cycle of limited digital capacity, while policy makers lack the real-time data and analytical capabilities needed for evidence-based decision making.

Beyond Catch-Up: Agentic AI as Governments’ Chance to Move in Sync with Technology

Unlike previous waves of digitisation that primarily automated routine tasks or improved existing processes, agentic AI systems can perceive complex situations, reason through problems involving judgement and discretion, and take autonomous action to achieve specified outcomes within defined boundaries.

This capability shift is particularly powerful for core government functions. Public administration involves numerous tasks that require processing large volumes of information, applying consistent criteria across thousands of cases, and coordinating across multiple departments and stakeholders. AI agents are well suited to these activities, and they can maintain complete audit trails while keeping human officials in charge of complex cases that require discretionary judgment.

The timing is favourable for government adoption on several fronts. AI technologies have matured sufficiently to handle real-world government complexity whilst costs have decreased enough to enable widespread deployment. Citizens increasingly expect digital service experiences comparable to those they receive from leading private sector organisations, creating public demand for agentic government services. Perhaps most importantly, governments that act now can actively shape the development trajectory of agentic AI to serve public purposes rather than merely adapting to systems designed by others according to commercial priorities.

“The Digital State was our first step. The next is an Agentic State — one that understands people’s needs, offers solutions, and provides the right tools. Ukraine is already moving toward a model where just one request or a single voice message stands between a person’s need and the result. That is what an Agentic State looks like, where technology truly works for people. By realising this vision, Ukraine has already launched Diia.AI, the world’s first national digital agent that provides government services. This is a real step toward a state that operates faster, simpler, and more precisely. AI should become the foundation of public administration, from automating routine processes to delivering personalised services for every citizen. It is about speed, efficiency, and comfort in everyday interaction with the government, because the true purpose of AI is to solve people’s problems.”

- Mykhailo Fedorov, First Vice Prime Minister and Minister of Digital Transformation, Ukraine

The Stakes Are Higher This Time

This transformation differs fundamentally from previous technology adoption cycles in both scale and speed. The acceleration is staggering: the telephone took 75 years to reach 100 million users, the mobile phone 16 years, the internet seven, Facebook under five, TikTok nine months, and ChatGPT just two months. Agentic AI is arriving not over decades but over months, compressing the timeframe for institutional adaptation.

More critically, agentic AI represents a qualitative leap beyond previous automation waves. Where earlier technologies digitised existing processes, agentic systems can redesign workflows entirely. These technical capabilities enable entirely new forms of public service. Citizens could experience truly proactive government — systems that identify eligibility for benefits before applications are submitted, detect compliance issues before violations occur, and coordinate seamlessly across agencies to deliver integrated life-event services. Businesses could interact with governments through automated compliance reporting that adapts in real-time to regulatory changes whilst reducing administrative burden by orders of magnitude.

Early adopters from private sector organisations demonstrate these capabilities at commercial scale. Financial institutions deploy autonomous agents that process millions of transactions daily, detecting fraud patterns and managing compliance requirements across multiple jurisdictions. Manufacturing companies use intelligent agents to coordinate supply chains, production schedules, and quality control across global operations in real-time. E-commerce platforms deploy customer service agents that handle complex queries, process returns and refunds, and escalate only exceptional cases requiring human intervention.

“In the coming years, assistive AI will be one of the main drivers of economic growth. Public administration and the state must not fall behind. In the future, state services will no longer be something that citizens have to request; they will become an obligation on the state to deliver. Through AI, the state will be able to approach people with precisely the services they need.”

- Florian Tursky, former State Secretary for Digitalisation and Telecommunications, Austria

The Upside

The upside of agentic AI adoption and readiness in government will be a dramatic uplift in public sector performance. Jurisdictions whose public administrations embrace agentic AI will have better, faster and more cost-effective government and will also govern better, offering their citizens and businesses less bureaucracy, faster decision-making, more transparency and accountability, and better crisis management. Finally, they will tilt the field in favour of faster private sector adoption of agentic capabilities, providing an overall uplift to the economy and empowering citizens.

These benefits are not abstract or theoretical. They will show up in very concrete measures of public sector performance and service quality, widening the gap that already exists between best performers and laggards in digital government. Leading governments are already defining new KPIs that raise the bar for what public administration can achieve: faster services, higher satisfaction, and sharp reductions in routine effort. Estonia and Ukraine, for example, have embedded ambitious targets like those in the table below into their national plans, signalling a step change in expectations for digital-era governance.

| Metric | Target Indicator or Change |
|---|---|
| Time to complete most end-user digital services | 1 minute |
| Time to launch new digital services | 1 day |
| User satisfaction with public services | > 90% |
| Reduction in human effort on inter-departmental and public facing correspondence | 90% |
| % of needs resolved without human intervention | 95% of single-interaction requests |

A Call for Proactive Leadership

The deployment of agentic AI will come with inevitable risks and setbacks. Not everything will work. But the alternative — avoiding agentic systems entirely due to complexity or risk concerns — will prove even more costly:

- As private sector AI capabilities advance, governments that cannot provide comparable services risk being seen as obsolete and ineffective. This would deepen the crisis of legitimacy that states already face in many countries as they struggle to provide public goods.

- Citizens, businesses, and bad actors will increasingly leverage agentic AI. Where the state does not keep up, those actors will run circles around public administration, using agentic capabilities to achieve their goals.

- When the government fails to provide comprehensive or easy-to-use services, enterprises will fill the gap and build paid services around it, raising the cost for citizens and businesses of interacting with the government.

- When laggards do catch up, they will find themselves managing systems designed by others according to priorities that may not align with public purposes.

Government action in preparing for agentic AI should be urgent, not because the technology is perfect or potential future risks are comprehensively understood, but because the cost of delay exceeds the cost of early, thoughtful experimentation.

The choice is clear: lead the transformation or be transformed by it. The window for proactive choice may be narrower than many government leaders recognise. With this initiative, we aim to help governments become proactive.

“The Agentic State makes the stakes crystal clear, and it should be setting the agenda in the US for the bold reforms. It should be required reading for policymakers and advocates in all domains.”

- Jennifer Pahlka, former Deputy Chief Technology Officer, United States

Understanding Agentic AI

AI systems that can perceive, reason, and act can transform how all organisations operate

| TL;DR: Agentic AI combines the ‘brain’ of reasoning systems (LLMs) with the ‘hands’ of automation (RPA), creating software that can perceive, reason, and act with minimal human supervision. Unlike past tech waves that only digitised processes, agents pursue outcomes, adapt through feedback, and collaborate with humans and other agents. Agents exist on a spectrum of autonomy, often working cooperatively, and are already deployed across industries in research, coding, compliance, and customer service. For government, where structured, high-volume tasks dominate, the potential is especially significant: agents can shift public administration from doing things right to doing the right things, delivering faster, more accountable, and outcome-driven services. |

What Are AI Agents? Brain Meets Hands

Generative AI, with its capacity to create novel and human-like content (e.g. text, images, audio, code, or synthetic data), is now ‘eating’ tasks that involve complex cognition, creativity and understanding, such as content creation, communication and software development. In many executive, research and creative roles, generative AI is already seeing widespread adoption.

AI agents take this a step further by introducing systems that can not only generate and analyse data, but also perceive, reason, and act with minimal human intervention. These so-called agentic systems can manage end-to-end processes, learn, self-optimise, and collaborate with humans and other agents.

This distinguishes agents from all previous forms of software. While traditional automation follows a rigid, predefined process, an agent possesses a degree of autonomy. It is defined by its capacity to perceive inputs (telemetry, documents, UI, media), reason and plan a sequence of actions under defined policies, and act through tools and APIs to achieve its objective with minimal human intervention. It can also adapt via feedback, improving over time through its own actions. This shift from executing a process to achieving an outcome is the defining characteristic of the agentic paradigm.

To understand what makes this possible, we need to look at how two distinct technological streams have converged: the brain enabling reasoning and planning and the hands enabling action and execution, now combined in a single system.

The Brain Stream: The evolution began with IBM's Deep Blue defeating Kasparov in 1997, a system that lacked broader intelligence. Neural networks and deep learning in the 2010s then made AI feasible for a range of predictive and analytic tasks, including translation and computer vision. Finally, the transformer architecture (2017) enabled Large Language Models like GPT-3 (2020) that could engage in dialogue, solve math problems, and generate code, but that remained passive, suggesting actions without executing them.

The Hands Stream: In parallel, automation evolved from simple scripts and macros in the early 2000s to sophisticated Robotic Process Automation (RPA) bots in the 2010s that could mimic human actions: clicking, typing, and processing data across systems. Such tools reduced errors and freed workers from mundane tasks, but they required deterministic, rule-based programming and lacked judgment.

When these two streams converge, we get an AI agent: a system that both understands what needs to be done and has the means to actually do it. The agent can perceive its environment (via APIs and interfaces), reason about the request (using the LLM brain), and act by executing tasks through integrations such as the Model Context Protocol (MCP), APIs, or RPA tools. The agent paradigm represents the synthesis: understanding the goal, figuring out how to achieve it, and then actually doing it, bridging the gap between intelligence and action that neither GenAI nor RPA could bridge alone.
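To make the perceive-reason-act loop concrete, the following is a minimal Python sketch of a single agent handling an address-change request. Everything in it is hypothetical: the tool functions `lookup_record` and `update_address` stand in for real APIs, MCP servers or RPA bots, and the `reason` method is a hard-coded stand-in for an LLM planner. It illustrates three ideas from the text: actions pass through tools (the "hands"), an allow-list of tools acts as the agent's defined boundary, and every step lands in an audit log.

```python
from dataclasses import dataclass, field
from typing import Callable

# Hypothetical tools ("hands"). Real deployments would wrap APIs, MCP servers or RPA bots.
def lookup_record(citizen_id: str) -> dict:
    return {"citizen_id": citizen_id, "address_on_file": "Old Street 1"}

def update_address(citizen_id: str, new_address: str) -> dict:
    return {"citizen_id": citizen_id, "address_on_file": new_address}

@dataclass
class Agent:
    tools: dict[str, Callable[..., dict]]    # every tool the runtime knows about
    allowed: set[str]                        # policy boundary: tools this agent may use
    log: list[str] = field(default_factory=list)  # audit trail of every step

    def perceive(self, request: dict) -> dict:
        # Turn a raw request into a structured observation.
        return {"intent": request.get("intent"), **request.get("params", {})}

    def reason(self, obs: dict) -> list[tuple[str, dict]]:
        # Stand-in for the LLM "brain": map an intent to an ordered plan of tool calls.
        if obs["intent"] == "change_address":
            cid = obs["citizen_id"]
            return [
                ("lookup_record", {"citizen_id": cid}),
                ("update_address", {"citizen_id": cid, "new_address": obs["new_address"]}),
            ]
        return []  # no plan: hand over to a human

    def act(self, plan: list[tuple[str, dict]]) -> list[dict]:
        results = []
        for name, args in plan:
            if name not in self.allowed:
                self.log.append(f"BLOCKED {name}")
                raise PermissionError(f"tool {name!r} is outside this agent's mandate")
            result = self.tools[name](**args)
            self.log.append(f"CALLED {name}")
            results.append(result)
        return results

    def handle(self, request: dict):
        plan = self.reason(self.perceive(request))
        if not plan:
            self.log.append("ESCALATED to human")
            return None
        return self.act(plan)[-1]  # outcome of the final action
```

A request with an unknown intent produces no plan and is escalated rather than guessed at, and a tool outside the allow-list raises an error instead of executing. For example, `Agent(tools={"lookup_record": lookup_record, "update_address": update_address}, allowed={"lookup_record", "update_address"}).handle({"intent": "change_address", "params": {"citizen_id": "C-42", "new_address": "New Road 7"}})` returns the updated record.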

Levels of Agentic Progression

The term ‘AI agent’ describes a wide spectrum of systems with varying degrees of autonomy. Full autonomy is still the exception in real systems, most of which benefit from human checkpoints and explicit guardrails. The useful lens is not a binary distinction between agent and human, but an understanding of who decides what, with what authority, and how reversibly. To examine agency more closely (and cut through market hype), it is useful to adopt a progression framework that categorises agents by their capabilities. We use the taxonomy developed by Bornet and coauthors (2025).

In the automotive industry, six levels of driving automation are defined (SAE), from Level 0 (fully manual) to Level 5 (fully autonomous). Most vehicles on the road today operate at Level 2 or below, where automation may handle steering and speed but a human driver remains responsible. The same is true for AI agents. While fully autonomous systems capture public attention, the reality is that most agents currently in deployment operate with a human in the loop.

| Level | What Agents Do | Car Analogy | Main Technology | Real-world deployments |
|---|---|---|---|---|
| Level 0 — Manual (Human-only) | Humans perform all tasks; no automation. | Manual driving; no assistance. | Basic digital tools (spreadsheets, email), manual processing. | Paper/email workflows, manual data entry, spreadsheet ops. Production. |
| Level 1 — Rule-based automation | Simple automation follows fixed rules (RPA, scripts). | Basic cruise control maintains speed. | RPA, scripts, rule engines. | Email routing, payment STP, fraud rules engines. Production (ubiquitous). |
| Level 2 — Intelligent process automation | Automation + cognitive capabilities (ML/NLP/CV) with orchestration. | ADAS handles speed & steering with supervision. | ML, NLP, CV, RPA, process orchestration. | AP invoice extraction, claims triage, contact-center assist. Production (common). |
| Level 3 — Agentic workflows | Agents plan, reason, create content, and adapt within defined domains. | Highway auto-nav; human handles edge cases. | LLMs, memory systems, tool use, basic RL. | Copilots (support/coding/marketing), RAG analysts, automated ETL. Production (narrow, supervised) + many pilots. |
| Level 4 — Semi-autonomous agents | Agents act autonomously in bounded expertise; adapt strategies & learn. | Self-driving operates autonomously in specific conditions. | Advanced reasoning & planning, real-time adaptation, causal reasoning. | Driverless taxis, warehouse robotics, inspection drones, AIOps auto-remediation. Limited production in constrained environments; otherwise experimental. |
| Level 5 — Fully autonomous agents | Cross-domain learning and self-adaptation with no humans involved. | Fully autonomous cars drive anywhere in all conditions. | Sophisticated memory systems, advanced learning mechanisms, autonomy safety. | None today; research only. |

It is crucial to understand that higher levels are not always better. The appropriate level of autonomy depends entirely on the specific application's risk profile, complexity, and need for human oversight. There is a critical trade-off: as an agent's autonomy increases, direct human control and the predictability of its actions decrease. For sensitive domains, a highly reliable and auditable Level 3 agent is often preferable to a more autonomous but less predictable Level 4 system.
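The trade-off between autonomy and control can be written down as an explicit policy. The sketch below shows one hypothetical way to do so in Python: the level names follow the progression table above, but the risk categories and the caps in `RISK_CAPS` are illustrative assumptions, not part of the Bornet et al. taxonomy. An agent whose level exceeds the cap for a given action needs human sign-off, and irreversible actions lower the cap by one level, echoing the question of "how reversibly" a decision can be undone.

```python
from enum import IntEnum

class Level(IntEnum):
    """Autonomy levels as in the progression table (0 = manual, 5 = fully autonomous)."""
    MANUAL = 0
    RULE_BASED = 1
    INTELLIGENT_PROCESS = 2
    AGENTIC_WORKFLOW = 3
    SEMI_AUTONOMOUS = 4
    FULLY_AUTONOMOUS = 5

# Hypothetical policy: the highest level allowed to act without sign-off, by risk.
# Level 5 is never permitted, reflecting that no such systems are deployed today.
RISK_CAPS = {
    "low": Level.SEMI_AUTONOMOUS,
    "medium": Level.AGENTIC_WORKFLOW,
    "high": Level.INTELLIGENT_PROCESS,
}

def max_autonomy(risk: str, reversible: bool) -> Level:
    cap = RISK_CAPS[risk]
    if not reversible:
        # Irreversible actions lose one level of autonomy, but never go below manual.
        cap = Level(max(cap - 1, Level.MANUAL))
    return cap

def needs_human_signoff(agent_level: Level, risk: str, reversible: bool) -> bool:
    """True if the agent's autonomy exceeds what this action's risk profile allows."""
    return agent_level > max_autonomy(risk, reversible)
```

Under these assumed caps, a Level 3 agent may act alone on a reversible medium-risk task but needs sign-off once the same task becomes irreversible, which mirrors the point that a reliable, auditable Level 3 agent is often the right choice for sensitive domains.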

Cooperative Agents

Agent architectures increasingly rely on multi-agent systems rather than single, monolithic agents. Just as human organisations divide complex work among specialists who collaborate, multi-agent systems distribute tasks across specialized agents — each optimized for specific functions — that coordinate to achieve broader goals.

This division of labor offers several critical advantages. First, it improves reliability: when agents have clearly separated roles (such as planner, executor, and verifier), errors can be caught earlier and are less likely to cascade through the entire system. Second, it enables reusability: a document-parsing agent built for one workflow can be reused in dozens of others with little or no modification. Third, it can reduce development costs substantially, as teams can compose new capabilities from existing agent components rather than building from scratch.
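The planner/executor/verifier division can be sketched as a small Python pipeline. This is an illustration under stated assumptions, not a reference architecture: the goal name, the eligibility rule, and all function names are hypothetical, and each "agent" is a plain function where a real system would use separate model-backed agents. The verifier runs after every step, so a bad output halts the pipeline before it reaches later stages, and `parse_document` is a self-contained component that other workflows could reuse.

```python
from typing import Callable

def planner(goal: str) -> list[dict]:
    # Planner role: decompose a goal into ordered steps (an LLM would do this in practice).
    if goal == "process_application":
        return [{"task": "parse_document"}, {"task": "check_eligibility"}]
    raise ValueError(f"no plan for goal {goal!r}")

def parse_document(doc: dict) -> dict:
    # Executor role, reusable: turn a raw submission into typed fields.
    return {"income": int(doc["income"]), "household_size": int(doc["household"])}

def check_eligibility(fields: dict) -> dict:
    # Executor role: hypothetical rule, for illustration only.
    eligible = fields["income"] <= 20000 * fields["household_size"]
    return {**fields, "eligible": eligible}

EXECUTORS: dict[str, Callable[[dict], dict]] = {
    "parse_document": parse_document,
    "check_eligibility": check_eligibility,
}

def verifier(task: str, output: dict) -> None:
    # Verifier role: independent sanity checks after each step.
    if task == "parse_document" and (output["income"] < 0 or output["household_size"] < 1):
        raise ValueError(f"verification failed after {task}: {output}")

def run(goal: str, data: dict) -> dict:
    for step in planner(goal):
        data = EXECUTORS[step["task"]](data)
        verifier(step["task"], data)  # stop here rather than pass bad data downstream
    return data
```

For example, `run("process_application", {"income": "30000", "household": "2"})` returns `{"income": 30000, "household_size": 2, "eligible": True}`, while a submission with a negative income is rejected by the verifier before the eligibility step ever runs.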

What AI Agents Are Doing Today

AI agents are no longer just a vision: they are already operating in production across industries. What distinguishes these systems is that they do not just generate content or follow rules — they perceive inputs, reason about what to do next, act through digital tools or interfaces, and learn from outcomes, combining capabilities that used to be separate:

- Perceive: Agents can take in unstructured inputs — documents, interfaces, conversations, or telemetry data. For example, a healthcare scribe listens to a doctor–patient consultation and captures the relevant details for medical records.

- Reason: Using LLMs and other models, agents can plan and decide the next step in a process. Research assistants, for instance, can decompose a complex query into smaller questions, retrieve relevant papers, and propose a structured outline.

- Act: Instead of stopping at recommendations, agents can execute tasks through APIs, RPA, or direct computer control. Travel-booking agents, for example, not only suggest flights but also fill in forms and confirm reservations.

With feedback loops, agents can also refine their performance over time. A coding agent that receives corrections from a developer can improve future outputs and adapt to a project’s style. In practice, agents are often deployed in cooperative workflows, where they interact with other agents and humans, forming small ecosystems rather than working in isolation.

Today’s deployments cluster in domains where tasks are bounded, success is measurable, and guardrails are clear. Most common categories include:

- Research and Analysis: Agents that sift through large volumes of documents, extract insights, and draft structured reports. They accelerate discovery but still rely on human oversight for accuracy.

- Coding and Software Development: Beyond autocomplete, coding agents can plan, write, and test programs, working as junior developers that boost productivity while humans make higher-level design choices.

- Computer Use Agents: Systems that can operate a digital environment directly — navigating forms, clicking through interfaces, and automating workflows even when APIs do not exist.

- Domain Specialists: Narrowly focused agents in areas like legal review, compliance monitoring, healthcare note-taking, IT operations, or logistics optimisation. By tackling well-defined processes, these agents achieve reliability and immediate ROI.

Across these examples, the pattern is clear: agents thrive when they augment human work in specific contexts, delivering speed and consistency while humans provide judgment and oversight. Their role is less about replacing people than about transforming workflows — bridging intelligence with action in ways that previous technologies could not.

Agents in Government: From Doing Things Right to Doing the Right Things

Agents are no longer a laboratory curiosity. In industry, they already run customer service, IT operations, cybersecurity, logistics, and documentation, showing that the technology is real and already delivering results when properly scoped. Governments can expect comparable benefits, which should grow as the technology improves.

Government operations are characterised by specific features that make them particularly well placed to benefit from agentic AI: high-volume, low-complexity tasks that follow structured decision-making frameworks. Processing benefit applications, issuing permits, conducting routine inspections, and managing citizen inquiries all involve similar patterns applied to thousands of cases with measurable outcomes and well-documented procedures. This creates ideal conditions for AI efficiency gains whilst maintaining quality and accountability.

Previous waves of automation focused on ‘doing things right’ — digitising existing processes to execute established procedures more efficiently. Agentic AI enables governments to focus on ‘doing the right things’ — defining desired outcomes and allowing intelligent systems to determine optimal approaches within defined constraints.

Many governments have developed overly process-driven cultures that prioritise procedural compliance over outcome achievement. Agentic AI inverts this relationship: rather than specifying every step in a workflow, governments can specify objectives, constraints, and success criteria, then allow agents to optimise approaches based on real-world feedback and learning. Adopting a culture of continuous improvement will also be needed to take advantage of these capabilities.

The prize is not automation for its own sake, but better services and outcomes with stronger accountability than many human-only processes offer today.

“Agentic AI is the next frontier of government digitisation and modernisation — ultimately redefining the relationship between the state, citizens, and organisations. The UAE government will approach this with the same ambition and vision we brought to previous waves of transformation. We are eager to advance this agenda and contribute to the international exchange that will help all governments navigate this transformation successfully.”

- H.E. Mohamed Bin Taliah, Chief of Government Services, United Arab Emirates

The Agentic State: Our Thinking and Approach

A framework for understanding how government transforms through agentic AI across a set of functional layers

| TL;DR: The Agentic State will transform government across all its activities. We analyse this transformation through 12 layers: implementation layers show where agents can deliver immediate, visible value to citizens, businesses, and government operations, while enablement layers address the broader structural requirements that must function effectively for agentic applications to be successfully deployed whilst preserving political accountability and public trust. Together, they mark the most significant shift in public administration since the rise of bureaucracy — moving from rule-based procedures to outcome-driven governance. |

Defining the Agentic State

The Agentic State represents a fundamental reimagining of how government operates. It is not just a matter of deploying individual AI agents within existing structures; it means a comprehensive transformation of public administration itself.

In the Agentic State, human judgement and activity are enhanced by agentic systems that can handle the complexity and scale of traditional bureaucratic processes. This frees human officials to focus on the strategic, political, and creative aspects of governance that require distinctly human capabilities.

Unlike previous technology trends, agentic AI can go far beyond the automation of existing processes and therefore enables entirely new forms of public administration. Where traditional systems follow predetermined rules and break down at edge cases, agentic systems can reason through ambiguity, learn from results, and operate with genuine autonomy within their assigned scope. This allows for a shift from process-driven bureaucracy towards outcome-driven organisation and represents the most significant transformation in public administration since the introduction of modern bureaucracy itself.

The Agentic State is not a distant or utopian vision but an immediate strategic imperative. The technologies required are already commercially available and are increasingly being proven at scale.

A Multi-Layer Framework: From Direct Applications to Foundational Requirements

Our thinking on the Agentic State is structured around multiple functional layers, driven by the recognition that whilst agentic applications are clearly valuable, far more must work properly for the Agentic State to take shape successfully.

We broadly divide the functional layers into those where agentic AI is applied and those that enable its application:

Implementation Layers (1–6) represent where agentic capabilities deliver immediate, visible value to citizens, businesses, and government operations. These include public service design and user experience that citizens directly encounter, government workflows that improve administrative efficiency, policy- and rule-making processes that enhance decision quality, regulatory compliance and supervision that ensures oversight at scale, crisis response capabilities that enable rapid coordinated action, and procurement systems that transform how governments acquire goods and services.

Enablement Layers (7–12) address the broader structural requirements that must function effectively for agentic applications to be successfully deployed whilst preserving political accountability and public trust. These enablement conditions span agent governance frameworks for accountability and redress, data management and privacy protection, technical infrastructure, cybersecurity and resilience measures, public finance and the procurement of agents, as well as organisational culture and leadership transformation.

Together, these layers create what we understand as the Agentic State. The framework can be read both horizontally and vertically. Horizontally, the implementation layers reveal where agentic AI can be deployed to transform government workflows and deliver value for citizens and businesses. Vertically, it becomes evident that any deployment of agents within an implementation layer requires careful alignment across all enablement layers: successful transformation demands coordinated progress across the entire system rather than isolated deployments.

“The idea of the Agentic State gives us a holistic view of what the capabilities AI agents connected to government services can provide. It also gives us a framework on how to approach the once again very complex problem of providing government services by applying digital possibilities. LLMs in combination with AI agent architecture have strong potential to improve digital inclusion which still remains a big challenge to many nations.”

- Jarkko Levasma, Government Chief Information Officer, Director General, Ministry of Finance, Finland

Naturally, progress across all layers towards the Agentic State will not always happen simultaneously nor at the same pace — particularly given the varying organisational capacity, political priorities, and technical readiness across different country contexts. Yet moving towards the Agentic State will require substantial strategic coordination across all layers, and this holistic framework serves to clarify how individual efforts contribute to the broader transformation and where each initiative fits within the overall architecture.

The subsequent chapters provide detailed analysis of each layer, examining current challenges, transformation opportunities, and implementation pathways specific to that domain.

A Shift in Governance

Beyond transformation in specific layers, the Agentic State will also bring a broader shift in how government works, creating inevitable friction between the status quo and the new operational models enabled by AI agents. Points of friction will include:

Rule-Based Decision Making vs. Outcome Optimisation: Bureaucracy applies predetermined rules to specific situations. Agentic systems optimise toward defined outcomes within constraint boundaries, potentially discovering approaches that no human rule-writer anticipated. When an agentic system finds a more effective way to achieve policy goals, bureaucratic rule-compliance can prevent adoption of superior approaches.

Hierarchical Authority vs. Network Coordination: Agentic systems operate through networks of specialised agents coordinating across organisational boundaries. Traditional hierarchies cannot provide the real-time oversight and coordination these systems require. A tax compliance agent might need to interact with agents from regulatory agencies, financial institutions, and international organisations — outside traditional bureaucratic chains of command.

Specialised Roles vs. Adaptive Capabilities: Functional specialisation of government bodies creates deep expertise within narrow domains but struggles with cross-cutting challenges. Agentic systems can dynamically reconfigure their capabilities based on task requirements, potentially making rigid role specialisation a barrier to effective human-AI collaboration.

Periodic Planning and Decision-Making vs. Continuous Learning and Iteration: Traditional bureaucracy rewards accumulated experience within established procedures. Agentic systems require human partners who can adapt continuously, learn new technical skills, and be comfortable with emergent rather than precedent-based decision-making.

Most fundamentally, traditional notions of good governance optimise for consistency and predictability rather than effectiveness and adaptation. This creates public administrations that can explain why they followed proper procedures even when those procedures produced poor outcomes. Agentic systems, by contrast, optimise directly for outcomes within defined constraints.

“While the future paradigm promises hyper-personalised services delivered at near-zero marginal cost, back-office workflows where bureaucracy ‘melts away’, and the capacity for ‘living laws’ that adapt in real-time to achieve policy targets, it requires a deep and deliberate investment in the foundations outlined in this report. A government that cannot holistically measure public value will be unable to define outcome-driven objectives for its AI agents. A government that lacks robust, trust-building governance will not have the public license to deploy autonomous systems. A government that cannot manage socio-technical risk will be unable to ensure these systems are safe and fair. And a government without common technical standards and an enterprise architecture will be incapable of orchestrating the complex, interconnected systems that define the Agentic State. Therefore, the adoption of this integrated framework is a necessary and urgent strategic step.”

- Chris Fechner, Chief Executive Officer, Digital Transformation Agency, Australia

1. Public Service Design and User Experience

From fragmented portals to proactive, personalised, and self-composing public services

| TL;DR: Agentic AI will transform public service design from fragmented portals into proactive, personalised, and self-composing services that flow seamlessly around citizens’ lives. Agents orchestrate complex needs across departments, anticipate issues before they arise, and adapt interactions across channels and contexts. The prize is a shift from bureaucratic transactions to outcome-driven, people-centred government — while the cost of inaction is growing frustration, inequality, and dependence on private intermediaries. |

Where We Are Now

The Agentic State represents a fundamental departure from the status quo, in which citizens and businesses experience government as a collection of separate departments, each requiring different forms, credentials, and processes. The user experience (UX) remains transactional and brittle. Standard cases follow rigid workflows, while anything outside the norm results in manual handling and bureaucratic back-and-forth.

Even governments leading in public service design have digitised this fragmentation rather than eliminating it. Interfaces are smooth and the queues are digital, but users still bear the burden of understanding which department does what and managing interactions across organisational boundaries. While citizens expect to deal with a single front door for government, the reality is services that remain largely fragmented across departments, forcing people to repeat information and navigate silos.

Increasingly, efforts to design services around user-centric life events have become part of the standard digital government playbook. This approach organises public services around key moments in people's lives: having a child, starting a business, or retiring. While effective for capturing broad, predictable stages of life, the life events model misses the granular, messy, and often urgent needs and edge cases that define real user journeys. The technical and coordination costs of manually (re)designing life event services are also significant, preventing this approach from scaling to all users and situations.

“Citizens do not distinguish between public and private digital experiences — they simply expect every interaction to be intuitive, fast, and genuinely helpful, whether they are ordering food, managing finances, or applying for permits. Government leaders may find the word ‘sexy’ uncomfortable when describing public services, but the truth is that government products are competing directly with commercial applications for citizens' attention, time, and trust — and losing badly when they prioritise compliance over usability, process over outcomes, or institutional convenience over user needs. The brand of government services is not a logo or color scheme. Government design carries special responsibility because these products do not just serve individual users; they influence social cohesion, democratic participation, and public trust in institutions, making design strategy a continuous process that must evolve with society's changing needs and cultural contexts while leaving lasting positive impact in citizens' real lives, not just digital experiences.”

- Valeriya Ionan, Advisor to the First Vice Prime Minister of Ukraine, Former Deputy Minister of Digital Transformation, Ukraine

Agentic Public Service Design and UX

In the Agentic State, public services flow directly around people’s lives, with service design occurring as a constant, dynamic process. At the core of agentic public services is an agent acting on behalf of the user, weaving interactions into daily life without requiring deliberate effort: needs are anticipated before they are spoken, solutions assemble themselves across departments, and interactions adapt instantly to context — whether through voice, text, or emerging interfaces. Services become continuous, personalised, and seamless, transforming government from a distant bureaucracy into an active presence that works with citizens rather than waiting for them.

This new mode of public service design and UX rests on four defining characteristics:

Hyper-Personalisation: Agentic interactions adapt automatically to individual circumstances, capabilities, and preferences. A recent graduate gets different tax guidance than a serial entrepreneur. New parents receive different benefit information than those caring for elderly relatives. Context drives customisation: the same services morph based on user needs. This characteristic is reinforced by the possibility of accessing services through personal agents that act as interfaces and orchestrators between users and services.

Self-Composition: Complete solutions assemble dynamically from distributed services. A citizen asking for ‘help after house damage’ triggers a coordinated response across insurance verification, emergency housing, building permits, contractor certification, and utility restoration. Citizens state intent once; agents orchestrate everything else invisibly and handle bureaucratic complexity in the background.

Anticipation: Services detect needs and identify opportunities before citizens recognise them. If a family's income drops below assistance thresholds, relevant programmes contact them directly with pre-filled applications (and explain how and why the user was contacted proactively).

Multimodality and Omnichannel: Citizens access government anywhere, anyhow. Voice conversations for the visually impaired, chat interfaces for digital natives, AR overlays for spatial information. Start on the phone, continue on the laptop, and finish through voice commands, with full continuity and context maintained across channels. Public services weave into other user journeys, for example visa applications during trip booking.
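The following minimal Python sketch makes the anticipation characteristic concrete. It assumes that a household record is already held in government data and that each programme is defined by a single monthly income threshold. The names `Household`, `Programme`, `Offer` and `anticipate`, and the eligibility logic itself, are hypothetical illustrations, not any government's actual rules. The sketch shows one thing: each proactive contact carries both a pre-filled application and a plain-language explanation of why the person was contacted.

```python
from dataclasses import dataclass


@dataclass
class Household:
    # Hypothetical household record assembled from existing government data.
    household_id: str
    name: str
    monthly_income: float
    size: int


@dataclass
class Programme:
    # Hypothetical assistance programme with a simple income threshold.
    name: str
    income_threshold: float
    min_household_size: int = 1


@dataclass
class Offer:
    household_id: str
    programme: str
    prefilled: dict    # application fields filled from data already held
    explanation: str   # why the household was contacted proactively


def anticipate(households, programmes):
    """Return a pre-filled offer for every household that meets a programme's rules."""
    offers = []
    for hh in households:
        for prog in programmes:
            eligible = (
                hh.monthly_income < prog.income_threshold
                and hh.size >= prog.min_household_size
            )
            if not eligible:
                continue
            offers.append(
                Offer(
                    household_id=hh.household_id,
                    programme=prog.name,
                    prefilled={
                        "name": hh.name,
                        "monthly_income": hh.monthly_income,
                        "household_size": hh.size,
                    },
                    explanation=(
                        f"You were contacted because your recorded monthly income "
                        f"({hh.monthly_income:.0f}) is below the {prog.name} threshold "
                        f"({prog.income_threshold:.0f})."
                    ),
                )
            )
    return offers


if __name__ == "__main__":
    households = [Household("h1", "A. Tamm", 1200, 3), Household("h2", "B. Kask", 4000, 2)]
    programmes = [Programme("Family Support", 1500, min_household_size=2)]
    for offer in anticipate(households, programmes):
        print(offer.programme, offer.household_id, "-", offer.explanation)
```

Real eligibility rules are far richer than one threshold. The part that carries over is the pairing of every automated outreach with an explanation the recipient can read and contest.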

Evidence from Real-World Deployments

L1–L2 — Government Foundations. Many governments already have the building blocks for agentic public service design in place. The UK's GOV.UK Notify provides infrastructure for proactive communications. Singapore's SingPass creates authentication foundations for personalised services. These represent L1–L2 capabilities — structured automation ready for agentic enhancement.

L3–L4 — Private Sector Deployment. Agentic service delivery already operates at commercial scale, demonstrating technical feasibility:

- Salesforce Agentforce orchestrates complete customer service resolution across multiple systems, escalating only complex exceptions whilst maintaining full audit trails. At Heathrow Airport, Hallie achieves 90% chat resolution without human transfer, adapting responses to passenger contexts and coordinating across airport systems in real-time — classic L3 agentic interaction capability.

- Banking sector anticipation shows sophisticated capabilities. Leading banks deploy agents that detect unusual spending patterns, proactively contact customers about potential fraud, and automatically coordinate card replacement, transaction disputes, and merchant communications whilst adapting communication style to customer preferences and urgency levels — L4 semi-autonomous operation within financial regulatory constraints.

- Healthcare platform composition demonstrates complex service assembly. Patient apps like those deployed by Kaiser Permanente orchestrate appointment scheduling, prescription management, specialist referrals, and insurance coordination based on individual health profiles and treatment plans. The system adapts pathways based on patient responses and clinical outcomes without requiring patients to understand healthcare bureaucracy — L3–L4 service composition in highly regulated environments.

A few jurisdictions have begun to offer agentic interactions and services to users:

- Diia.AI. Ukraine is piloting a national AI agent inside its government portal that executes services end-to-end directly in chat. The intent is to shift from forms to outcomes: users describe a need and the agent completes the workflow, with more services added over time.

- TAMM 3.0. Abu Dhabi’s latest release of its government app TAMM adds an AI assistant with conversational voice in Arabic and English, personalising and orchestrating access to a one-stop shop of 800+ services.

Implementation Dynamics

Several dynamics will shape the implementation of agentic service delivery and distinguish government transformation from transformation in other domains. We believe the following will be key:

Citizen Contact Ownership: Embedding government services in private platforms creates a fundamental choice about citizen relationships. When visa applications happen during trip booking, citizens may interact through private intermediaries rather than directly with governments. The question is whether governments become an underlying service infrastructure for all services or remain a separate service provider. This requires conscious decisions about maintaining direct citizen relationships versus enabling seamless third-party integration. Governments must strike a balance between visibility and control on the one side and user convenience on the other.

User Preference for Level of Agentic Interaction: Service personalisation will evolve into agent personalisation, whereby the user chooses which agents support them to what extent and controls what services can be provided by which agents. Preferences for service delivery and freedom to choose the degree of agentic service provision become an important choice for citizens.

Back-Office Integration Requirements: Agentic UX requires a complete transformation of internal workflows. Self-composing services cannot operate on fragmented internal workflows or siloed data systems. When agents orchestrate ‘help after house damage’ across insurance, housing, permits, and utilities, every internal process — eligibility verification, approval workflows, payment systems, inter-agency coordination — must be redesigned for machine-speed coordination. Otherwise, agentic interfaces become shallow facades that break down when citizens need actual service delivery.

Transparency and Explainability: Government agents must operate with complete transparency where private sector applications tolerate opacity. Citizens need to understand not just decisions but reasoning, alternatives considered, and appeal processes. This requires structured explanations, complete decision logs, and clear escalation paths — legal requirements for due process that go beyond technical functionality. Governments may need to legislate to permit proactive outreach for specific services where the user has not explicitly given consent (e.g. to deliver benefits to vulnerable and underserved populations).

Platform and Market-Making Role: Government’s biggest contribution to agentic UX may lie not in developing its own agents but in enabling users to ‘bring their own agent’, using personal AI assistants developed by private sector providers. The work needed for governments to act as platforms in this scenario is no less voluminous, including the provision of APIs, standards for quality, security and privacy, assurance and redress (see layers 7, 8, and 9). Governments will also likely need to support developers with specialised interfaces for specific communities: disability services, compliance tools, immigrant applications.

Universal Access Obligations: Private companies optimise for profitable customers; governments must serve everyone. This demands channel parity across interfaces, multilingual capability beyond translation, accessibility beyond compliance, and digital inclusion pathways for those lacking devices or skills.

“Serving both California, the world’s fourth largest economy, and Montenegro, a country of half a million people, taught me that scale does not change the fundamental challenges governments face: calcified processes, limited capacity, and the urgent need for thoughtful leadership. Technology and AI are powerful tools, but they are not solutions on their own. Real change begins with a deep understanding of human problems, not the temptation to ‘sprinkle AI’ on broken systems. When you have a hammer, everything looks like a nail; and we need to be cautious not to treat AI as a hammer. The real test of AI is not how advanced it appears, but whether it improves lives in practice and reflects the needs of the people it is meant to serve. Done well, agentic AI can strengthen democracy by helping governments reflect plural values, make better decisions, and build systems that serve everyone, not just the privileged few.”

- Tamara Srzentić, former Minister of Public Administration, Digital Society and Media, Montenegro and former Deputy Director and Lead, California Office of Innovation and Pandemic Digital Response, United States

Cost of Inaction

Governments that fail to transform service delivery face accelerating citizen frustration as private services become increasingly intelligent whereas government remains fragmented and slow. The expectation gap erodes trust and political legitimacy.

Without agentic transformation, digital divides widen. Sophisticated citizens and businesses navigate complex systems effectively while vulnerable populations fall further behind, creating two-tier service delivery that contradicts equal treatment principles.

Most critically, private intermediaries fill the orchestration gap. Citizens increasingly rely on third-party platforms to navigate government complexity, creating dependencies that compromise equity, privacy, and control over public services.

Questions That Will Matter in the Future

- How can governments design self-composing services that work seamlessly across traditional agency boundaries while preserving accountability and political oversight? What institutional reforms enable service orchestration without undermining the checks and balances that prevent abuse of government power?

- Should governments provide universal baseline agents to ensure equitable access to agentic services, or can market-based approaches deliver adequate public benefit? What governance frameworks prevent agentic service delivery from creating new forms of digital inequality based on agent quality or sophistication?

- Who is liable in a situation where a citizen misses important legal obligations because of miscommunications or omission of information by an AI agent? What legal status does an agent acting on behalf of an individual carry: does the agent represent the individual, or is it a facsimile of the individual?

- How do we balance proactive government service with citizen privacy and autonomy? What safeguards prevent helpful anticipation of citizen needs from becoming intrusive surveillance or paternalistic decision-making that undermines individual agency?

- What constitutes appropriate transparency and explainability when AI agents make decisions affecting citizen services? How can government agents provide clear reasoning for their actions while operating at the speed and scale that makes agentic service delivery valuable?

- How do we measure success in agentic service delivery beyond traditional metrics of speed and efficiency? What performance frameworks capture improvements in citizen outcomes, equity, and political values alongside operational gains?

2. Government Workflows

From manual bottlenecks to self-orchestrating government operations

| TL;DR: Government workflows are the state’s operating system, yet today they remain digitised paper trails riddled with bottlenecks. Agentic AI transforms them into self-orchestrating, outcome-driven operations: agents integrate across departments, allocate resources intelligently, and continuously optimise processes in real time. The result is faster, more accurate, and more transparent government action, freeing civil servants to focus on judgment and oversight. The cost of inaction is severe: outdated workflows hard-code delay and inefficiency into everything the state does, leaving governments unable to govern effectively. |

Where We Are Now

Every government runs on workflows — the structured sequences of tasks that move information, apply rules, and generate decisions. These processes are the machinery that transforms political intent into administrative reality. Though largely invisible to citizens, workflows absorb the majority of governments’ time, budget, and staff capacity. They determine whether a benefits application takes weeks or months, whether a business license requires three forms or thirty, whether agencies coordinate seamlessly or work at cross-purposes. In short, workflows are what make governments function (and are frequently the source of dysfunction).

Currently, most government workflows operate as digitised versions of paper processes rather than being redesigned for digital capabilities. Information moves through email chains that replicate postal correspondence. Digital forms mirror paper layouts, requiring manual data entry even when information already exists in government databases. Approvals follow hierarchical chains designed for physical signatures. Even where processes are automated, users end up providing identical information to multiple agencies because systems cannot communicate despite operating on the same network. Processing times stretch for weeks not because work is complex, but because it waits in digital queues for human attention that adds no genuine value. The result is expensive inefficiency disguised as digital modernisation — technology deployed to accelerate old processes rather than enable new possibilities.

Agentic Government Workflows

Workflows in the old model optimise for doing things right: rigid steps, box-ticking, and procedural compliance. Agentic workflows optimise for doing the right things: delivering outcomes aligned with policy intent. In the Agentic State, agents dynamically assemble and execute workflows in real-time, drawing from across departments, data sources, and rule systems to create optimal pathways for each specific case — rather than forcing every case through the same rigid sequence. The promise is compelling: speed and precision without brittleness.

Four characteristics define this shift:

Outcome-Driven Orchestration: Agents align workflows with policy intent, dynamically sequencing tasks to achieve targets such as faster approvals, higher accuracy, or tighter compliance. A permit agent might be tasked with approving 90% of construction applications within ten days while rigorously enforcing zoning and climate standards. It verifies documents, checks rules, and issues draft permits — reconfiguring its approach as needed to stay on pace without compromising standards.

Intelligent Resource Allocation: High-volume, low-complexity cases are handled end-to-end by agents, freeing human expertise for complex or sensitive judgments. Routine approvals skip managerial layers; exceptional cases route directly to specialists; urgent matters leapfrog queues. Human capacity is no longer wasted on repetition but concentrated where discretion, negotiation, or creativity are essential.

Cross-System Integration Without Handoffs: Agents cut through silos by linking systems, agencies, and jurisdictions into seamless flows. A single business licence application triggers identity verification, budget checks, and registry updates across departments — all instantly, without clerical transfers. A citizen’s address change cascades automatically to tax, benefits, and voter rolls, eliminating today’s fragmented and error-prone handoffs.

Continuous Process Optimisation: Every workflow run becomes a feedback loop. Agents benchmark performance against defined goals — turnaround times, error rates, compliance accuracy — and adjust their methods in real time. Optimisation compounds rapidly: agents spot bottlenecks, collapse redundant steps, and refine sequencing across thousands of near-identical cases, driving down costs and delays. In low-volume, high-complexity workflows, learning focuses on surfacing better decision support: identifying which cases deserve escalation, what contextual data improves judgment, and how explanations can be made clearer. Across both types, processes do not remain static; they continuously evolve, so the more government works, the better and faster it becomes.
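In architectural terms, the address-change example is an event-driven pattern: one agency publishes a change once, and every subscribed system updates itself. The sketch below assumes a simple in-process publish/subscribe bus and three hypothetical registries (`tax`, `benefits`, `voter_roll`). A production system would use a message broker with authentication, consent checks and durable delivery, and none of that is modelled here. The class `EventBus` and the helper `registry_updater` are illustrative names, not an existing library. Every update is written to an audit log, in keeping with the transparency expectations placed on government agents.

```python
from collections import defaultdict


class EventBus:
    """Minimal in-process publish/subscribe bus (illustrative only)."""

    def __init__(self):
        self._subscribers = defaultdict(list)  # event type -> [(agency, handler)]
        self.audit_log = []                    # (event type, agency, outcome)

    def subscribe(self, event_type, agency, handler):
        self._subscribers[event_type].append((agency, handler))

    def publish(self, event_type, payload):
        # Deliver the event to every subscribed agency and record each outcome.
        for agency, handler in self._subscribers[event_type]:
            outcome = handler(payload)
            self.audit_log.append((event_type, agency, outcome))


def registry_updater(registry):
    """Build a handler that writes the new address into one agency's registry."""
    def handle(event):
        registry[event["citizen_id"]] = event["new_address"]
        return "updated"
    return handle


# Hypothetical agency registries, keyed by citizen ID.
registries = {
    "tax": {"c42": "Old Street 1"},
    "benefits": {"c42": "Old Street 1"},
    "voter_roll": {"c42": "Old Street 1"},
}

bus = EventBus()
for agency, registry in registries.items():
    bus.subscribe("address_changed", agency, registry_updater(registry))

# The citizen reports the change once; every subscribed registry is updated.
bus.publish("address_changed", {"citizen_id": "c42", "new_address": "New Road 7"})
```

The publishing agency does not need to know which other systems hold the address. New consumers join by subscribing, which is what removes the clerical handoffs described above.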

“The State of Goiás has been implementing AI agents across several administrative sectors, with a particular focus on digital document analysis and evaluation processes. These applications have achieved significant efficiency gains, optimising workflows and reducing the need for direct human intervention at various operational stages.”

- Adriano da Rocha Lima, General Secretary of Government, State of Goiás, Brazil

Evidence from Real-World Deployments

L1 — Digitised and Assisted Workflows (rules-based, human-led). The best digitised administrations mostly operate at this level (with many still stuck at L0). Systems execute predefined scripts: matching invoices to purchase orders by comparing reference numbers, routing benefit applications to appropriate departments based on category codes, generating standard HR checklists when new employees are hired. Forms move electronically rather than on paper, but non-deterministic steps require human approval.

L2 — AI-Assisted Classification and Routing (human-in-the-loop). Common in most newer ERP software. Machine learning systems analyse incoming documents, extract relevant data fields, and assign priority scores or risk categories. Tax and accounting systems automatically populate forms using the previous year's data; inspection agencies rank facilities by risk algorithms; HR systems parse CVs and score candidates against job requirements. Humans review these machine-generated recommendations and make final decisions on every case.

In the state of Goiás, Brazil, agentic systems are being used to optimise several backend processes. In just one of these processes — the review of new innovative projects submitted for financing — the AI agent has already cut the average analysis time from one year to a single week, and reduced the required staffing by 33 people.

L3 — Agent-Assisted Orchestration (end-to-end flows under supervision). Agents coordinate multiple systems to execute complete processes, escalating only when predetermined thresholds are exceeded.

- Klarna: An AI system processed 2.3 million customer conversations monthly, resolving issues that previously required human agents. When customer satisfaction declined, the company reintroduced human agents for complex cases while maintaining AI for routine inquiries.

- Adecco: Automated systems screen 300 million job applications annually, conduct initial candidate conversations (57% occurring outside business hours), and create shortlists for human recruiters to review.

Government applications at L3 execute complete workflows: permit systems that sequence checks across multiple departments, validate requirements, and generate approval documents; benefit systems that gather evidence from multiple databases, calculate entitlements, and produce decisions with explanations.

L4 — Semi-Autonomous Operations (bounded autonomy with guardrails). Systems operate independently within predefined parameters and automatically stop when limits are reached.

- Amazon's procurement systems autonomously manage the purchasing process for standard supplies — identifying needs, selecting vendors, placing orders, and reconciling invoices — within preset spending limits per category, vendor, and time period. Human intervention is required only for exceptions or complex cases when thresholds are exceeded.

- Google's network traffic management automatically reroutes data flows during outages or congestion, reallocates server capacity based on demand, and scales resources up or down within predetermined cost and performance boundaries — all without human intervention unless system-wide parameters are breached.

- Singapore's smart traffic management will soon adjust signal timing, toll pricing, and lane configurations in real time based on traffic flows, automatically optimising for citywide throughput while respecting maximum toll rates and minimum service levels set by transport authorities.
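Bounded autonomy of the kind described for procurement reduces, at its core, to a guardrail check before every action. The following Python sketch assumes per-category spending limits for a single period. The class `SpendingGuardrail`, the function `process_orders` and the category names are hypothetical; they do not describe Amazon's or any government's actual system. Orders that fit within the remaining budget are placed autonomously. Anything that would breach a limit, or that falls in a category with no configured limit, is escalated to a human instead.

```python
from dataclasses import dataclass, field


@dataclass
class SpendingGuardrail:
    """Hypothetical per-category budget for one period."""
    limits: dict                               # category -> maximum spend per period
    spent: dict = field(default_factory=dict)  # category -> spend so far

    def allows(self, category, amount):
        limit = self.limits.get(category)
        if limit is None:
            return False  # categories without a limit are never handled autonomously
        return self.spent.get(category, 0.0) + amount <= limit

    def record(self, category, amount):
        self.spent[category] = self.spent.get(category, 0.0) + amount


def process_orders(orders, guardrail):
    """Place orders autonomously while within limits; escalate the rest to a human."""
    placed, escalated = [], []
    for category, amount in orders:
        if guardrail.allows(category, amount):
            guardrail.record(category, amount)
            placed.append((category, amount))
        else:
            escalated.append((category, amount))
    return placed, escalated


if __name__ == "__main__":
    guardrail = SpendingGuardrail(limits={"office_supplies": 1000.0})
    orders = [("office_supplies", 400.0), ("office_supplies", 500.0),
              ("office_supplies", 200.0), ("servers", 5000.0)]
    placed, escalated = process_orders(orders, guardrail)
    print("placed:", placed)
    print("escalated for human review:", escalated)
```

This sketch escalates only the offending order and keeps processing later ones, so a small order can still be placed after a large one has been escalated. A stricter design would halt the whole queue at the first breach. Which behaviour is appropriate is a policy choice, not a technical one.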

“Improving government workflows is a primary objective, and must be addressed sector by sector. In Italy, we are trying to build concrete applications to enable the changes, including legislative and regulatory ones, needed to achieve a real impact on public services. In the healthcare sector, a priority is optimising waiting lists for diagnostic tests such as CT scans, X-ray scans, etc. To avoid no-shows, we are trying to integrate agents who, by monitoring habits, traffic, and weather conditions, can ensure that healthcare officials can use overbooking options or manage situations outside the healthcare sector (transportation, accessibility), thus helping citizens achieve the real goal: executing the CT scan! With these agents fully under human control, we are addressing challenges regarding their scope of competence, regulations on procedural responsibility, and privacy, transparency, and accountability tools.”

- Mario Nobile, Director General, Agency for Digital Italy

Implementation Dynamics

Transforming internal workflows into agentic systems is a technological, institutional, and organisational challenge. Several dynamics will determine whether agentic workflows deliver efficiency or stall in partial adoption:

Efficiency as a Political Mandate: Workflow reform has long been framed as ‘back-office modernisation,’ a secondary priority compared to frontline service delivery. In an Agentic State, this logic shifts. Without transforming workflows, efficiency gains elsewhere remain capped. Governments must treat workflow optimisation as a political priority — measured, funded, and mandated explicitly — if they are to unlock capacity for everything else.

The Performance Measurement Shift: Traditional workflow metrics focus on activity rather than outcomes: applications processed, meetings held, documents reviewed. Agentic systems make it possible to go beyond output metrics and measure what actually matters: problems solved, citizen satisfaction, time from need identification to resolution, cost per successful outcome. Managing this transition requires new metrics, new incentive structures, and political commitment to accountability for results rather than process compliance.

Redefining Roles for Civil Servants: As agents absorb high-volume, low-complexity tasks, the work of public officials shifts to supervision, exception handling, and cross-system coordination. This demands retraining, new performance evaluations, and recognition of supervisory judgment as central to public value creation. Without deliberate workforce design, efficiency gains may coexist with staff alienation and institutional resistance.

Management of Integration Complexity: Government workflows cross organisational, legal, and technical boundaries that resist simple integration. A comprehensive case management system must coordinate with separate budget systems, legal databases, external vendor platforms, and citizen-facing services — each with different data formats, security requirements, and operational constraints. Success requires standardised interfaces and protocols that enable interoperability without forcing wholesale replacement of existing systems.
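One common way to achieve interoperability without replacing legacy systems is the adapter pattern. Each existing system keeps its own format, and a thin translation layer maps its records onto a shared schema. The Python sketch below assumes two hypothetical source systems:

- a budget system that exports amounts in cents, with ISO dates;
- a case management system that exports nested JSON, with day/month/year dates.

The schema `CaseRecord`, the `ADAPTERS` registry and all field names are illustrative assumptions.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class CaseRecord:
    """Hypothetical shared schema that every connected system is mapped onto."""
    case_id: str
    citizen_id: str
    opened: date
    amount_eur: float


def from_budget_system(row):
    # Hypothetical legacy budget export: flat fields, amounts in cents, ISO dates.
    return CaseRecord(
        case_id=row["CASE_NO"],
        citizen_id=row["PERSON_ID"],
        opened=date.fromisoformat(row["OPEN_DATE"]),
        amount_eur=row["AMOUNT_CENTS"] / 100,
    )


def from_case_system(record):
    # Hypothetical case-management export: nested JSON, day/month/year dates.
    day, month, year = (int(part) for part in record["opened"].split("/"))
    return CaseRecord(
        case_id=record["id"],
        citizen_id=record["applicant"]["id"],
        opened=date(year, month, day),
        amount_eur=float(record["claim"]["eur"]),
    )


# Registry of adapters, one per connected source system.
ADAPTERS = {"budget": from_budget_system, "case_management": from_case_system}


def normalise(source, payload):
    """Translate a payload from a named source system into the shared schema."""
    return ADAPTERS[source](payload)


if __name__ == "__main__":
    a = normalise("budget", {"CASE_NO": "K-1", "PERSON_ID": "c42",
                             "OPEN_DATE": "2025-03-01", "AMOUNT_CENTS": 12550})
    b = normalise("case_management", {"id": "K-1", "applicant": {"id": "c42"},
                                      "opened": "01/03/2025", "claim": {"eur": "125.50"}})
    print(a == b)  # both systems describe the same case in the shared schema
```

Adding another system means writing one more adapter and registering it in `ADAPTERS`. No system that consumes case records has to change.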

Cost of Inaction

If workflows do not change, nothing changes. They are the state’s operating system — every decision, every payment, every approval runs through them. Outdated workflows hard-code delay into everything the government tries to do. Policy ambitions collapse into bottlenecks, and capacity is wasted on moving paper instead of solving problems.

The efficiency gains here are not marginal; they are existential. Transforming workflows unlocks speed, accuracy, and scale across the entire machinery of government as well as better citizen outcomes. Refusing to modernise means condemning public servants to clerical drudgery, citizens to frustration, and institutions to permanent incapacity. Governments that fail to act will not merely be inefficient — they will be unable to govern.

Questions That Will Matter in the Future

- How should governments measure success when workflows optimise for outcomes rather than steps? Can performance metrics capture improvements in citizen experience, service quality, and policy results alongside efficiency gains?

- How do agentic workflows avoid reinforcing bias in how cases are routed, prioritised, or resolved? What safeguards guarantee due process and accountability when decisions are made at machine speed? How can citizens exercise a right to challenge workflow outcomes in ways that are understandable and accessible? What safeguards ensure that agentic services do not unintentionally exclude or misrepresent marginalised groups because of biased data or limited digital access?

- When workflows span multiple agencies or jurisdictions, who resolves conflicts between competing priorities or legal requirements? How can integration reduce bureaucratic friction without undermining the checks and balances meant to prevent abuse of power, and do we need new inter-agency governance structures to oversee cross-boundary workflows?

- How can public servants transition from clerical processing to oversight and coordination without losing morale or institutional knowledge? What training and career pathways will prepare civil servants for supervising and improving agentic systems, and how do governments preserve service continuity during the shift?

3. Policy- and Rule-Making

From episodic to continuous, evidence-based regulation that adapts to real-world outcomes

| TL;DR: In the Agentic State, governments move from episodic regulation to continuous, evidence-based regulation that adapts in real time. Agents simulate policy before adoption, encode rules as machine-readable logic, refine parameters dynamically, and integrate continuous citizen and business feedback. This enables regulation that is more adaptive, transparent, and effective — while still preserving political oversight. The cost of inaction is growing gaps between law and life, with rules that are outdated on arrival, arbitrage by private actors, and eroding trust in governments’ ability to govern. |

Where We Are Now

Policy-making and regulatory rule-making today operate on slow, reactive cycles. Policy-making, the high-level goal-setting typically done through legislation, sets broad aims such as ensuring fair competition, reducing emissions, or guaranteeing access to healthcare. Regulatory rule-making, the detailed implementation work of agencies, translates those aims into thresholds, formulas, procedures, and compliance requirements. Both involve creating or modifying rules that apply to everyone within their scope.

Both policy- and regulatory rule-making are intentionally deliberative and procedural. Drafts are circulated, debated, and amended. Consultations are held with stakeholders. Decisions are published and then locked in place for years, sometimes decades. Updating them usually requires reopening a full legislative or regulatory process — costly, time-consuming exercises that create a strong bias toward stability and against adaptability.

This rigidity ensures predictability and consistency but also produces critical mismatches. Benefits formulas and tax codes lag behind economic realities. Environmental thresholds fail to reflect the latest scientific data. Consumer protection rules can trail technological change by entire product cycles. Even when adjustments are made, they are based on retrospective data, political compromise, or expert judgment — rarely on real-time evidence.

The result is a gap between the speed of law and the speed of life. Citizens and businesses experience uncertainty and inconsistency: eligibility criteria that do not reflect current circumstances, compliance requirements that change too late, enforcement that comes only after harm is done. Meanwhile, global markets, supply chains, and adversaries adapt continuously. Governments remain locked in deliberative tempos that cannot keep pace.

Agentic Policy- and Rule-Making

In the Agentic State, policy no longer freezes intentions into rules that age badly. AI agents continuously translate policy goals into adaptive, real-time regulations. Legislative bodies set the ‘what’ — the outcomes society wants, such as cleaner air, fairer markets, safer workplaces — informed by the best available evidence and AI-driven analysis. Agentic systems optimise the ‘how’: proposing optimal regulations as new data comes in, stress-testing rules against live scenarios, and publishing transparent rationales for each adjustment.

Four key characteristics will enable this:

Dynamic Policy Simulation: Before enacting new policies, agents test them across synthetic populations and digital twins of social systems. These simulations stress-test distributional effects, identify unintended consequences, and reveal edge cases that human analysis might miss. Like flight simulators for regulation, they allow governments to crash-test policies safely before deployment, running thousands of scenarios to understand how rules perform under different conditions — from economic shocks to demographic shifts to adversarial exploitation.
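A toy version of such a crash test is a Monte Carlo run over a synthetic population. The sketch rests on three stylised assumptions chosen for illustration:

- a hypothetical means-tested benefit (`benefit`);
- log-normally distributed synthetic incomes;
- income shocks modelled as a single multiplicative factor.

It is not a validated model of any real system.

```python
import random
from statistics import mean
from typing import Dict, Iterable, List


def benefit(income: float, threshold: float, taper: float) -> float:
    """Hypothetical means-tested benefit: pays `taper` times the gap below `threshold`."""
    return max(0.0, taper * (threshold - income))


def synthetic_population(n: int, seed: int) -> List[float]:
    # Log-normal incomes are a stylised assumption, not calibrated to real data.
    rng = random.Random(seed)
    return [rng.lognormvariate(10.0, 0.6) for _ in range(n)]


def simulate(population: List[float], threshold: float, taper: float,
             income_shock: float = 1.0) -> Dict[str, float]:
    # An economic shock is modelled as every income scaled by the same factor.
    incomes = [y * income_shock for y in population]
    payments = [benefit(y, threshold, taper) for y in incomes]
    # Distributional check: average payment to the lowest-income tenth of the population.
    order = sorted(range(len(incomes)), key=incomes.__getitem__)
    poorest = [payments[i] for i in order[: max(1, len(order) // 10)]]
    return {
        "share_eligible": sum(p > 0 for p in payments) / len(payments),
        "total_cost": sum(payments),
        "poorest_decile_mean": mean(poorest),
    }


def run_scenarios(threshold: float, taper: float, shocks: Iterable[float],
                  seeds: Iterable[int], n: int = 1_000) -> List[Dict[str, float]]:
    shocks = list(shocks)  # reused for every seed
    results = []
    for seed in seeds:
        population = synthetic_population(n, seed)
        for shock in shocks:
            results.append({"seed": seed, "shock": shock,
                            **simulate(population, threshold, taper, shock)})
    return results
```

Running `run_scenarios` over many seeds and several shock levels yields thousands of scenarios. Their spread shows how sensitive cost, coverage, and the poorest decile's outcome are to the assumptions. That sensitivity is the information a real simulator would need to make visible before a rule is enacted.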

Machine-Readable Law: Laws are encoded as formal, executable logic alongside their traditional prose versions. A clear hierarchy specifies which version prevails if the two conflict, so routine application no longer depends on interpreting ambiguous text. Eligibility thresholds, benefit formulas, tax calculations, and regulatory requirements become precise specifications that agents can apply consistently. At the same time, agents expand what is possible by interpreting regulation directly in natural language. The likely future is a spectrum: some rules (especially those that apply to natural persons) remain prose-only, others are enriched with structured tags and metadata to aid machine comprehension, and critical formulas or thresholds are expressed as executable code that eliminates ambiguity in routine cases.
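To illustrate the executable layer, the sketch below encodes a hypothetical income-tested benefit. The act name, section numbers, amounts, and rounding rule are all invented for the example. The point is that each clause becomes a small, testable function whose parameters are versioned separately. The code also records which provision it implements, so any conflict with the prose version (which prevails in this sketch) can be traced. `Decimal` is used to avoid binary floating-point rounding in money amounts.

```python
from dataclasses import dataclass
from decimal import ROUND_HALF_UP, Decimal


@dataclass(frozen=True)
class HousingSupportParameters:
    """Parameters of a hypothetical 'Housing Support Act, s. 4'. All values are invented."""
    base_threshold: Decimal = Decimal("1200.00")  # monthly income limit, single adult
    per_dependant: Decimal = Decimal("300.00")    # added to the limit per dependant
    taper_rate: Decimal = Decimal("0.50")         # share of the shortfall paid out
    in_force_from: str = "2025-01-01"             # parameter version, tracked separately


# In this sketch the prose text prevails on conflict; each function cites the
# (hypothetical) provision it encodes so discrepancies can be traced and fixed.

def income_threshold(dependants: int, p: HousingSupportParameters) -> Decimal:
    """s. 4(1): limit = base threshold + per-dependant amount x number of dependants."""
    if dependants < 0:
        raise ValueError("dependants must be non-negative")
    return p.base_threshold + p.per_dependant * dependants


def monthly_benefit(income: Decimal, dependants: int, p: HousingSupportParameters) -> Decimal:
    """s. 4(2): pay taper_rate x (limit - income), rounded half-up to the cent; zero if not eligible."""
    shortfall = income_threshold(dependants, p) - income
    if shortfall <= 0:
        return Decimal("0.00")
    return (shortfall * p.taper_rate).quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)
```

For example, a household with two dependants and a monthly income of 1,500.00 faces a limit of 1,800.00 and would receive 150.00. Because the rounding rule is written down, an edge case such as a shortfall of 299.99 resolves the same way every time.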

Adaptive Rule Refinement: Agents continuously monitor how rules perform in practice, tracking compliance rates, citizen outcomes, equity metrics, and systemic impacts. When policies drift from intended effects or conditions change, agents propose adjustments within predefined boundaries — updating inflation-indexed thresholds, rebalancing regulatory parameters, or flagging issues requiring human review. Micro-updates keep regulations aligned with reality while major changes remain subject to institutional deliberation, creating a clear distinction between technical parameter adjustment and fundamental policy revision.
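The split between micro-updates and escalation can be expressed as a delegated band. In the sketch below, the 5% band, the CPI inputs, and the function names are assumptions chosen for illustration. It works as follows:

- an indexation inside the band is marked for automatic application;
- anything outside the band is still computed but routed to human review;
- a separate check flags outcome metrics that drift from their intended values.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Proposal:
    parameter: str
    current: float
    proposed: float
    auto_apply: bool  # True only inside the delegated band
    reason: str


def propose_indexation(parameter: str, current: float, cpi_old: float, cpi_new: float,
                       max_auto_change: float = 0.05) -> Proposal:
    """Hypothetical bounded indexation: scale a threshold by the change in a price index."""
    if current <= 0 or cpi_old <= 0:
        raise ValueError("current value and base index must be positive")
    target = round(current * cpi_new / cpi_old, 2)
    change = (target - current) / current
    if abs(change) <= max_auto_change:
        return Proposal(parameter, current, target, True,
                        f"indexed to CPI ({change:+.1%}), within delegated band")
    # Outside the band the agent still proposes the figure but cannot apply it.
    return Proposal(parameter, current, target, False,
                    f"CPI implies {change:+.1%}, beyond ±{max_auto_change:.0%}; human review required")


def drift_flag(observed: float, intended: float, tolerance: float) -> bool:
    """True when an outcome metric (e.g. benefit take-up) has drifted beyond tolerance."""
    return abs(observed - intended) > tolerance
```

Keeping the computed figure in escalated proposals means reviewers see exactly what the agent would have done. The decision to apply it stays with a person.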

Participatory Intelligence: Rather than episodic consultations, continuous feedback streams inform policy refinement. Agents aggregate signals from citizen appeals, business friction reports, implementation data, and expert input, synthesising patterns that reveal when rules create unintended barriers or miss their targets. This transforms policy-making from periodic snapshots into continuous learning systems that improve through use, while maintaining democratic legitimacy through transparent reasoning and clear escalation paths for contested decisions.
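Aggregating feedback can start as simply as counting signals per rule and normalising by how often the rule is applied. The sketch assumes hypothetical signal tuples `(rule_id, channel)` and per-rule case counts. It also requires signals from at least two independent channels before flagging a rule. That requirement is one illustrative guard against a single noisy source triggering review, not an established standard.

```python
from collections import Counter, defaultdict
from typing import Dict, Iterable, List, Tuple


def rules_needing_review(signals: Iterable[Tuple[str, str]], cases_per_rule: Dict[str, int],
                         min_rate: float = 0.05, min_channels: int = 2) -> List[dict]:
    """Flag rules whose feedback rate is high and corroborated by several channels.

    `signals` holds hypothetical (rule_id, channel) pairs, e.g. ("s4(2)", "appeal").
    """
    signals = list(signals)  # may be a generator; it is traversed twice below
    counts = Counter(rule for rule, _ in signals)
    channels = defaultdict(set)
    for rule, channel in signals:
        channels[rule].add(channel)
    flagged = []
    for rule, n in counts.items():
        applied = cases_per_rule.get(rule, 0)
        if applied == 0:
            continue  # no denominator: this sketch skips rules without case counts
        rate = n / applied
        if rate >= min_rate and len(channels[rule]) >= min_channels:
            flagged.append({"rule": rule, "signal_rate": rate, "channels": sorted(channels[rule])})
    return sorted(flagged, key=lambda f: f["signal_rate"], reverse=True)
```

Flagged rules would then enter the escalation path described above rather than being changed automatically.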

“AI agents will streamline bureaucracy and transform the citizen experience by enabling truly proactive public services. Imagine the shift from repetitive form-filling to having a personal, AI-powered government concierge that anticipates your life events, provides relevant information and options, and carries out administrative tasks on your behalf. Furthermore, AI agents will increasingly combine generative and predictive capabilities, enhancing strategic decision-making in the public sector. They will enable governments to plan and manage infrastructure projects more efficiently through highly accurate forecasts of traffic, weather, and demographic trends, while also optimising healthcare systems by anticipating the future medical needs of the population.”

- Mark Boris Andrijanič, former Minister for Digital Transformation, Slovenia

Evidence from Real-World Deployments

L1–L2 — Foundations in Government: Several governments are experimenting with policy-as-code and machine-readable regulation. New Zealand’s Better Rules initiative has shown how legislation can be expressed in executable formats, allowing eligibility formulas and benefit rules to be tested before enactment. Financial regulators in the UK, Singapore, and elsewhere are piloting systems that publish regulations both as legal text and as structured logic that can be consumed by compliance software. OECD governments also increasingly use AI tools to draft regulations, analyse thousands of public consultation responses, and forecast likely impacts. These capabilities provide richer inputs and faster drafting, but final decisions remain fully human-led.

L3 — Continuous Adaptation in Practice: The private sector demonstrates that dynamic adjustment of rules at machine speed is already possible. Content moderation platforms continuously update enforcement thresholds and detection models, responding to emerging harms, appeals, and contextual factors. While controversial, these systems prove that algorithmically mediated policy adjustment can operate at global scale. In financial services, regulatory technology tools automatically interpret complex obligations across jurisdictions, update internal rules when regulations change, and provide real-time compliance guidance. Here, agents manage complete workflows under human supervision, showing how policy execution can be made adaptive without losing accountability.

L4 — Bounded Autonomy in Critical Systems: Unlike in other domains, where bounded autonomy is already visible, we could not identify mature L4 deployments in policy- or rule-making. This absence could be telling: rule-making is a foundational activity where delegation to agents is more challenging, and where even small technical adjustments can have wide distributive consequences. Higher autonomy in this field may require shifts not only in AI capabilities but also in institutional design, which organisations have yet to explore.

Implementation Dynamics

Transforming policy-making from static to adaptive creates tensions that no amount of technical sophistication can eliminate. Governments must navigate fundamental trade-offs between speed and deliberation, optimisation and politics, sovereignty and coordination. Agentic policy-making is likely to begin with technical rule-making, which can be changed more readily without the intervention of the legislature.

How this plays out depends on the following dynamics:

Delegation and Oversight: Agentic policy systems force an uncomfortable question: which decisions can agents make autonomously, and which require human and political authority? The instinct is to draw bright lines: agents adjust technical parameters (inflation indexing), humans decide substantive questions (eligibility rules). But this distinction collapses under scrutiny. Inflation adjustments can shift millions in or out of benefit programs. Threshold tweaks can redefine market structure.

The real challenge is designing delegation frameworks that specify not just what agents can change, but under what conditions, with what monitoring, and with what triggers for human review. Unlike private platforms that can adjust policies unilaterally, government rulemaking must maintain political accountability even at machine speed. This requires new oversight mechanisms. Success means treating delegation as dynamic risk-management rather than static legal classification.
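Delegation can be expressed as risk management rather than classification by making the mandate depend on measured impact as well as on the size of the change. The sketch below assumes a hypothetical `Mandate` with four elements:

- a relative-change limit;
- a cap on how many people may change eligibility status without review;
- a higher cap above which only political authority may decide;
- a freeze flag.

Eligibility is assumed to mean income below the threshold.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Sequence, Tuple


class Decision(Enum):
    AUTO_APPLY = "apply automatically, with monitoring"
    HUMAN_REVIEW = "queue for sign-off by an accountable official"
    POLITICAL = "refer to political authority"


@dataclass(frozen=True)
class Mandate:
    """Hypothetical delegation mandate for a single rule parameter."""
    max_relative_change: float  # e.g. 0.05 for 5%
    max_people_affected: int    # status changes allowed without review
    political_limit: int        # above this, only political authority may decide
    frozen: bool = False        # e.g. suspended during an election period


def people_crossing(incomes: Sequence[float], old: float, new: float) -> int:
    # Eligibility is assumed to mean income < threshold, so anyone in [low, high)
    # gains or loses eligibility when the threshold moves between old and new.
    low, high = sorted((old, new))
    return sum(low <= y < high for y in incomes)


def authorise(m: Mandate, old: float, new: float,
              incomes: Sequence[float]) -> Tuple[Decision, int]:
    affected = people_crossing(incomes, old, new)
    change = abs(new - old) / old
    if affected > m.political_limit:
        return Decision.POLITICAL, affected
    if m.frozen or change > m.max_relative_change or affected > m.max_people_affected:
        return Decision.HUMAN_REVIEW, affected
    return Decision.AUTO_APPLY, affected
```

Take incomes `[900, 1000, 1010, 1020, 1040, 2000]` and a mandate allowing a 5% change, 2 status changes, and a political limit of 3. Raising the threshold from 1000 to 1015 is applied automatically. Raising it to 1030, only a 3% change, is queued for review because three people cross the line. This mirrors the point that small parameter tweaks can have outsized distributive effects.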

Simulation and Validation: Digital twins and policy simulators promise to reveal consequences before implementation. But simulations are only as good as their models, and all models embed assumptions about how societies function. A simulation that accurately predicts effects on the median citizen might miss impacts on edge cases. Stress tests calibrated to historical crises might fail to anticipate novel shocks.

More fundamentally, making simulation results public can invite strategic behaviour, with actors gaming the model rather than responding to reality. The paradox: the more governments rely on simulation, the more they must simultaneously invest in validating models against reality, updating assumptions, and maintaining humility about what can be predicted. Policy sandboxes, controlled environments where proposed rules can be tested with real participants before full deployment, might offer a pragmatic middle ground, combining simulation's safety with real-world feedback before scaling.
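The gap between median-case accuracy and edge-case accuracy can be checked by backtesting predictions against observed outcomes per subgroup, not only in aggregate. The sketch assumes hypothetical `(group, predicted, observed)` records and a single absolute-error tolerance. A real validation regime would choose metrics and tolerances per policy.

```python
from statistics import mean
from typing import Dict, Iterable, List, Tuple


def validate_by_group(records: Iterable[Tuple[str, float, float]],
                      tolerance: float) -> Tuple[float, Dict[str, float]]:
    """Backtest (group, predicted, observed) records; return overall MAE and failing groups.

    Raises statistics.StatisticsError if `records` is empty.
    """
    errors: Dict[str, List[float]] = {}
    for group, predicted, observed in records:
        errors.setdefault(group, []).append(abs(predicted - observed))
    overall = mean(e for errs in errors.values() for e in errs)
    failing = {g: mean(errs) for g, errs in errors.items() if mean(errs) > tolerance}
    return overall, failing
```

Suppose eight records for median earners are each off by 2 and two records for gig workers are each off by 40. The overall mean absolute error is 9.6, which passes a tolerance of 10. The gig-worker group fails it, which is exactly the kind of edge case an aggregate check would hide.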

Accelerated Regulatory Arbitrage: When neighbouring jurisdictions, whether countries within a region or states and provinces within a federation, adopt agentic policy-making at different speeds, regulatory arbitrage accelerates. Businesses can continuously monitor jurisdictional differences and shift operations to exploit gaps faster than governments can coordinate responses. A firm that spots that one province's environmental thresholds are 10% looser may relocate before regulators in either jurisdiction notice the divergence.